Tree models are not just useful for point and mean forecasts.

Introduction

In the last article, we discussed how Decision Trees and Random Forests can be used for forecasting. While mean and point forecasts are the most obvious applications, they might not always be the most useful ones.

Consider the classic example of financial returns, where the conditional mean is hard, if not impossible, to predict. Conditional variance on the other hand has been shown to exert some auto-regressive properties that can be modelled. In fact there exist countless models from the (G)ARCH-family that enjoy widespread popularity.

Most GARCH models make primarily linear assumptions about the auto-regressive patterns. This begs the question if we can introduce non-linear relationships for more flexibility. Given the promising performance of tree models for mean forecasts, the roadmap is clear. Can we build respective models for variance forecasts?

Conditional variance and modelling options

Although there exists a lot of information on conditional variance models on the internet, let us quickly walk through the basics. For more insights, feel free to study the references in the GARCH wikipedia article. Alternatively, you might also get some additional insights from these two articles here and here.

To get started, we state our forecast target:

![]()

Pretty simple – we want to predict variance of a time-series based on the series’ past realizations. There is only one problem: We do not observe the time-series’ variance.

Of course, this is also true when for the time-series’ mean. However, by minimizing the mean-squared-error, our model will generate predictions for the mean (a.k.a. expected value). In a time-series modelling problem, this looks as follows:

![]()

![]() the set of all admissible models

the set of all admissible models

![]()

In words: A model that minimizes the MSE of the conditionals can be used as an estimator for the conditional mean. Of course, this requires the set of admissible models to be large enough. A linear model, for example, will fail as an approximation for a highly non-linear conditional mean.

Luckily, Decision Tree ensembles are very flexible and thus should make a decent set of candidate models.

Now hopes are up that there exists a suitable loss function that will find a similar estimator for conditional variances. Indeed there is a very convenient way:

Estimating Variance directly

This approach requires only a tiny bit of probability theory and a loose assumption on our data. Recall that the variance of any random variable can be decomposed as follows:

![]()

Notice that the expectation on the right can be estimated via the MSE minimization from before. Thus, with a respective estimator (e.g. Decision Tree ensemble), we can make use of the next formula:

![Rendered by QuickLaTeX.com \[\begin{gathered} \tilde{Y}_t\left|y_{t-1}, \ldots, y_{t-k}=Y_t\right| y_{t-1}, \ldots, y_{t-k}-\mathbb{E}\left[Y_t \mid y_{t-1}, \ldots, y_{t-k}\right] \\ \Rightarrow \mathbb{E}\left[\tilde{Y}_t \mid y_{t-1}, \ldots, y_{t-k}\right]=0, \\ \Rightarrow \operatorname{Var}\left(\tilde{Y}_t \mid y_{t-1}, \ldots, y_{t-k}\right)=\operatorname{Var}\left(Y_t \mid y_{t-1}, \ldots, y_{t-k}\right) \end{gathered}\]](https://sarem-seitz.com/wp-content/ql-cache/quicklatex.com-9af43ce7a2173848d4e6cfb92b44f7c6_l3.png)

Obviously, we don’t observe the actual conditional mean but only have our tree-based estimator at hand. Therefore:

![Rendered by QuickLaTeX.com \[\begin{gathered} \tilde{Y}_t\left|y_{t-1}, \ldots, y_{t-k}=Y_t\right| y_{t-1}, \ldots, y_{t-k}-\hat{f}^*\left(y_{t-1}, \ldots, y_{t-k}\right) \\ \Rightarrow \mathbb{E}\left[\tilde{Y}_t \mid y_{t-1}, \ldots, y_{t-k}\right] \approx 0, \\ \Rightarrow \operatorname{Var}\left(\tilde{Y}_t \mid y_{t-1}, \ldots, y_{t-k}\right) \approx \operatorname{Var}\left(Y_t \mid y_{t-1}, \ldots, y_{t-k}\right) \end{gathered}\]](https://sarem-seitz.com/wp-content/ql-cache/quicklatex.com-457eeba75d3c9a7c648ea6e636eddabc_l3.png)

Plugging this back into the variance formula, we get:

![Rendered by QuickLaTeX.com \[\begin{array}{r} \operatorname{Var}\left(\tilde{Y}_t \mid y_{t-1}, \ldots, y_{t-k}\right)=E\left[\tilde{Y}_t^2 \mid y_{t-1}, \ldots, y_{t-k}\right]-E\left[\tilde{Y}_t \mid y_{t-1}, \ldots, y_{t-k}\right]^2 \\ =E\left[\tilde{Y}_t^2 \mid y_{t-1}, \ldots, y_{t-k}\right]-0^2 \\ \Rightarrow \operatorname{Var}\left(\tilde{Y}_t \mid y_{t-1}, \ldots, y_{t-k}\right)=\operatorname{Var}\left(Y_t \mid y_{t-1}, \ldots, y_{t-k}\right) \\ =E\left[\tilde{Y}_t^2 \mid y_{t-1}, \ldots, y_{t-k}\right] \end{array}\]](https://sarem-seitz.com/wp-content/ql-cache/quicklatex.com-519ff5a3ba9eb4f022514c1551cfd150_l3.png)

This implies that the variance of our target variable equals the expectation of the squared mean-transformed variable.

Notice that we conditioned the transformed variable on the lagged original time-series. We can equally condition the transformed variable on the lagged transformed time-series. The latter is obviously much more convenient and popular. Thus, we’ll use this approach from now on.

Going back to our first equation, we can now easily build an estimator for this mean:

![Rendered by QuickLaTeX.com \[\begin{gathered} \hat{f}^{* *}=\underset{\hat{f} \in \mathcal{M}}{\operatorname{argmin}} \mathbb{E}\left[\left(\hat{f}\left(\tilde{y}_{t-1}, \ldots, \tilde{y}_{t-k}\right)-\tilde{y}_t^2\right)^2\right] \\ \Rightarrow \hat{f}^{* *}\left(\tilde{y}_{t-1}, \ldots, \tilde{y}_{t-k}\right) \approx \mathbb{E}\left[\tilde{Y}_t^2 \mid \tilde{y}_{t-1}, \ldots, \tilde{y}_{t-k}\right] \\ \quad\left(=\operatorname{Var}\left(Y_t \mid y_{t-1}, \ldots, y_{t-k}\right)\right) \end{gathered}\]](https://sarem-seitz.com/wp-content/ql-cache/quicklatex.com-de305ddc438c6ae7721c771fe533c460_l3.png)

We can now conclude the following:

If we train another model to minimize MSE on the squared transformed variable, that model becomes an estimator for the conditional variance.

Obviously, this method has one caveat: The model must only produce non-negative predictions. Otherwise, there is no guarantee that we won’t receive negative variance predictions on a test set.

This is where Decision Trees and Random Forests come in handy. The squared data for training is always non-negative. Therefore, a trained Decision Tree will only produce non-negative predictions, too.

A raw linear regression model on the other hand would not have such guarantees. Unfortunately, this also excludes Gradient Boosted Trees from the range of possible models. Standard Boosting produces a weighted sum of Decision Trees. Consequently, a single negative weight could result in negative predictions.

There is, nevertheless, a way to make Gradient Boosting work as well:

Using an adjusted loss function

Since Gradient Boosting is so powerful, it would be a bummer to not be able to use it for our problem. Luckily, most popular Boosting libraries allow to define custom loss functions. This is exactly what we will do here.

Consider again the mean-subtracted variable:

![]()

As a result we can impose the following distributional assumption:

![]()

The output from a Gradient Boosting algorithm itself can be negative. Adding exponentiation ensures non-negative variance. Now we simply need to translate the above into a log-likelihood loss function and calculate gradient and hessian. Then we plug everything into a Boosting algorithm:

![Rendered by QuickLaTeX.com \[\begin{gathered} L\left(\hat{f}_t, \tilde{y}_t\right) \\ =-0.5 \log (2 \pi)-0.5 \log \left(\exp \left(\hat{f}_t\right)\right)-\frac{\tilde{y}_t{ }^2}{2 \exp \left(\hat{f}_t\right)} \\ \text { where } \hat{f}_t=\hat{f}\left(y_{t-1}, \ldots, y_{t-k}\right) \\ \Rightarrow \frac{\partial L\left(\hat{f}_t, \tilde{y}_t\right)}{\partial \hat{f}_t}=-0.5+\frac{\tilde{y}_t{ }^2}{2 \exp (\hat{f})} \\ \Rightarrow \frac{\partial^2 L\left(\hat{f}_t, \tilde{y}_t\right)}{\partial^2 \hat{f}_t}=-\frac{\tilde{y}_t^2}{2 \exp (\hat{f})} \end{gathered}\]](https://sarem-seitz.com/wp-content/ql-cache/quicklatex.com-bdb843f14113fad3f773cdd83bdccbb7_l3.png)

Keep in mind that we need to apply the chain-rule to our additional exponential transformation.For Microsoft’s LightGBM package, our custom loss would now look as follows:

#see e.g. https://hippocampus-garden.com/lgbm_custom/

def gaussian_loss(y_pred, data):

y_true = data.get_label()

loglikelihood = -0.5*np.log(2*np.pi) - 0.5*y_pred - 0.5/np.exp(y_pred)*y_true**2

#remember that boosting minimizes the loss function but we want to maximize the loglikelihood

#thus, need to return the negative loglikelihood to the Boosting algorithm

#also applies to gradient and hessian below

return "loglike", -loglikelihood, False

def gaussian_loss_gradhess(y_pred, data):

y_true = data.get_label()

exp_pred = np.exp(y_pred)

#pay attention to the chain rule as we exp() the Boosting output before plugging it into the loglikelihood

grad = -0.5 + 0.5/exp_pred*y_true**2

hess = -0.5/exp_pred*y_true**2

return -grad, -hess

Now, let us implement both approaches and compare them against benchmark models:

Tree ensembles for volatility forecasts in Python

In general, the implementation should be straightforward. Apart from avoiding careless mistakes, there is nothing too special that be need to be aware of.

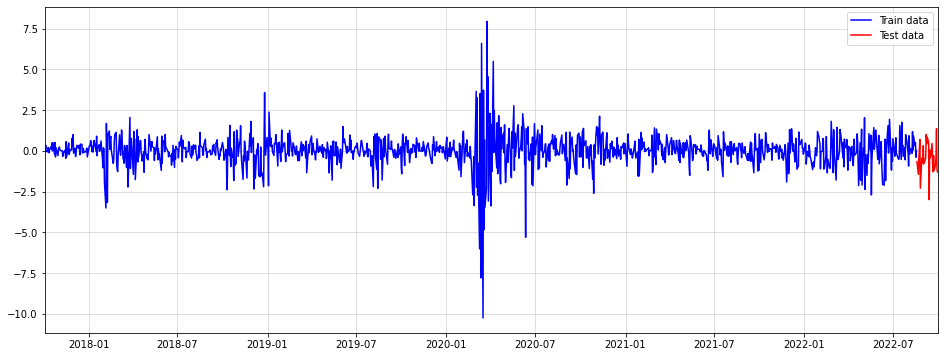

As a dataset, we’ll use five years of standardized Dow Jones log-returns:

import pandas as pd import numpy as np import yfinance as yf import matplotlib.pyplot as plt symbol = "^DJI" data = yf.download(symbol, start="2017-10-01", end="2022-10-01") returns = np.log(data["Close"]).diff().dropna() n_test = 30 train = returns.iloc[:-n_test] train_mean = train.mean() train_std = train.std() train = (train-train_mean)/train_std test = returns.iloc[-n_test:] test = (test-train_mean)/train_std #be careful to spill information over into the test period plt.figure(figsize = (16,6)) plt.plot(train, color = "blue", label = "Train data") plt.plot(test, color = "red", label = "Test data") plt.grid(alpha = 0.5) plt.margins(x=0) plt.legend()

Notice that we are performing a z-Normalization. This will make the subsequent GARCH model more robust to degenerate scaling of the time-series. Also, we reserve the last 30 days of data for a test set. Be careful not to introduce lookahead bias here.

Finally, it is reasonable to presume that conditional expected returns are always zero, i.e.

![]()

Otherwise, somebody else would have likely discovered this anomaly before us and arbitraged it away. Therefore, we don’t need to apply the mean-subtraction in the first place. Rather, we’ll directly use raw log-returns for our variance estimation.

Random Forest volatility forecasts

For the Random Forest model, we use sklearn’s RandomForestRegressor. To limit the risk of overfitting, we run the algorithm with a max_depth=3. Given the typically noisy behaviour of financial time-series, such regularization seems reasonable.

Finally, we set k=5 which lets our algorithm consider the past five observations for forecasting. While we could set this lower or higher, there is a tradeoff between omitting information and overfitting. In production, you would obviously do more backtesting to find the optimal number of lags.

In code, we now have

from sklearn.ensemble import RandomForestRegressor n_lags = 5 train_lagged = pd.concat([train**2]+[train.shift(i) for i in range(1,n_lags+1)],1).dropna() y_train = train_lagged.iloc[:,0] X_train = train_lagged.iloc[:,1:] forest_model = RandomForestRegressor(max_depth=3, n_estimators=100, n_jobs=-1, random_state=123) forest_model.fit(X_train.values, y_train.values)

To generate forecasts, we apply Monte-Carlo sampling. Then, we use those samples to estimate the 90% forecast interval. This is necessary because our model can only predict conditional variance one step ahead. Presuming Gaussian noise, this looks as follows:

from scipy.stats import norm

samp_size = 50000

Xt = pd.DataFrame(pd.concat([train.shift(i) for i in range(n_lags)],1).dropna().iloc[-1,:].values.reshape(1,-1))

Xt = pd.concat([Xt for _ in range(samp_size)])

Xt.columns = X_train.columns

np.random.seed(123)

forest_samples = []

for t in range(len(test)):

pred = forest_model.predict(Xt.values).reshape(-1,1)

samp = norm(0, 1).rvs(samp_size).reshape(-1,1)*np.sqrt(pred)

forest_samples.append(samp)

Xt = pd.DataFrame(np.concatenate([np.array(samp).reshape(-1,1),Xt.values[:,:-1]],1))

Xt.columns = X_train.columns

forest_samples_matrix = np.concatenate(forest_samples,1)

forest_std = np.std(forest_samples_matrix,0)

forest_lower = np.quantile(forest_samples_matrix,0.05,0)

forest_upper = np.quantile(forest_samples_matrix,0.95,0)

50000 samples per timestamp should suffice for now. If you need more accuracy, feel free to increase this amount by a lot.

Gradient Boosting volatility forecasts

The Gradient Boosting variant is quite similar to the Random Forest approach. We only need to take care of the LightGBM-specifics for data preprocessing. Also keep in mind that our target now needs to be the raw, z-normalized time-series, not the squared one.

import lightgbm as lgb

train_lagged = pd.concat([train]+[train.shift(i) for i in range(1,n_lags+1)],1).dropna()

y_train = train_lagged.iloc[:,0]

X_train = train_lagged.iloc[:,1:]

train_data = lgb.Dataset(X_train.values, label=y_train.values)

param = {"num_leaves":2, "learning_rate":0.1, "seed": 123}

num_round = 1000

boosted_model = lgb.train(param, train_data, num_round, fobj=gaussian_loss_gradhess, feval=gaussian_loss)

np.random.seed(123)

boosted_samples = []

for t in range(len(test)):

pred = boosted_model.predict(Xt.values).reshape(-1,1)

samp = norm(0, 1).rvs(samp_size).reshape(-1,1)*np.sqrt(np.exp(pred))

boosted_samples.append(samp)

Xt = pd.DataFrame(np.concatenate([np.array(samp).reshape(-1,1),Xt.values[:,:-1]],1))

Xt.columns = X_train.columns

boosted_samples_matrix = np.concatenate(boosted_samples,1)

boosted_std = np.std(boosted_samples_matrix,0)

boosted_lower = np.quantile(boosted_samples_matrix,0.05,0)

boosted_upper = np.quantile(boosted_samples_matrix,0.95,0)

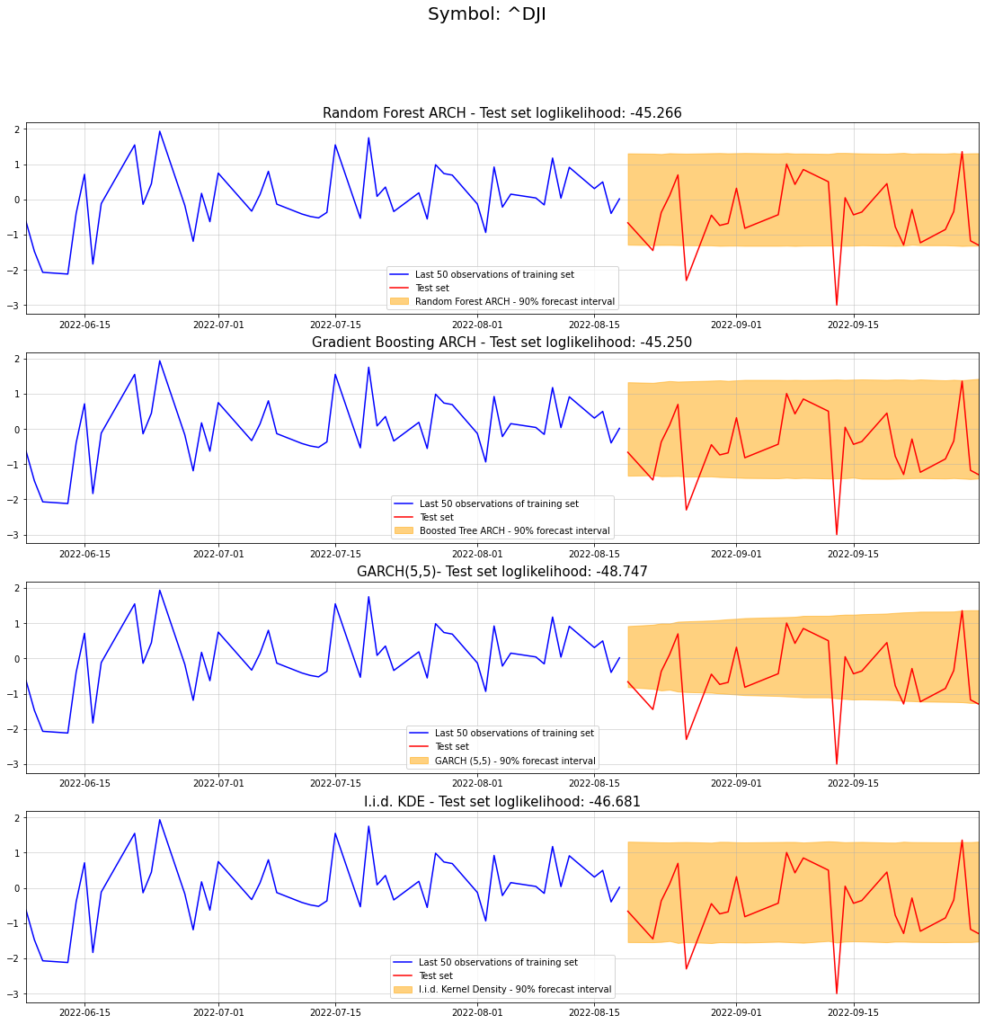

To check if our models are actually any good, let us compare them against two benchmarks:

GARCH and kernel density benchmarks and evaluation

Since we want to improve conditional volatility forecasts, GARCH seems to be the most obvious comparison. To match the number of lags in our tree ensembles, we’ll use a GARCH(5,5) model.

As a second benchmark, let us use a simple i.i.d. kernel density fit. I.e. we presume that each future observation is drawn independently from the same density as the training data:

from arch import arch_model from scipy.stats import gaussian_kde am = arch_model(train, p=n_lags,q=n_lags) res = am.fit(update_freq=5) forecasts = res.forecast(horizon=len(test), reindex=False).variance.values[0,:] garch_samples_matrix = res.forecast(horizon=len(test), simulations = 50000, reindex=False, method = "simulation").simulations.values[0,:,:] garch_std = np.std(garch_samples_matrix,0) garch_lower = np.quantile(garch_samples_matrix,0.05,0) garch_upper = np.quantile(garch_samples_matrix,0.95,0) iid_kde = gaussian_kde(train) iid_kde_samp = iid_kde.resample((50000*len(test))).reshape(50000,len(test)) kde_lower = np.quantile(iid_kde_samp,0.05,0) kde_upper = np.quantile(iid_kde_samp,0.95,0)

At last, the actual evaluation. As a performance measure, we want to use the out-of-sample log-likelihood – the higher the better. That way we will see which forecasted probability density performs best on our test set.

Since we could only sample from the forecast distributions, we’ll fit a kernel density to each of the Monte-Carlo samples. For our benchmark, we obviously have the kernel density fit from the training data already.

Finally, we calculate the log-likelihood for the kernel densities as a proxy:

benchmark_lpdfs = [iid_kde.logpdf(test[i])[0] for i in range(len(test))]

garch_lpdfs = [gaussian_kde(garch_samples_matrix[:,i]).logpdf(test[i])[0] for i in range(len(test))]

forest_lpdfs = [gaussian_kde(forest_samples_matrix[:,i]).logpdf(test[i])[0] for i in range(len(test))]

boosted_lpdfs = [gaussian_kde(boosted_samples_matrix[:,i]).logpdf(test[i])[0] for i in range(len(test))]

fig, (ax1,ax2,ax3,ax4) = plt.subplots(4,1,figsize=(19,18))

st = fig.suptitle("Symbol: "+symbol, fontsize=20)

ax1.plot(train.iloc[-50:], color = "blue", label = "Last 50 observations of training set")

ax1.plot(test, color = "red", label = "Test set")

ax1.grid(alpha = 0.5)

ax1.margins(x=0)

ax1.fill_between(test.index, forest_lower, forest_upper, color="orange", alpha=0.5, label="Random Forest ARCH - 90% forecast interval")

ax1.legend()

ax1.set_title("Random Forest ARCH - Test set loglikelihood: {}".format(str(np.sum(forest_lpdfs))[:7]), fontdict={'fontsize': 15})

ax2.plot(train.iloc[-50:], color = "blue", label = "Last 50 observations of training set")

ax2.plot(test, color = "red", label = "Test set")

ax2.grid(alpha = 0.5)

ax2.margins(x=0)

ax2.fill_between(test.index, boosted_lower, boosted_upper, color="orange", alpha=0.5, label="Boosted Tree ARCH - 90% forecast interval")

ax2.legend()

ax2.set_title("Gradient Boosting ARCH - Test set loglikelihood: {}".format(str(np.sum(boosted_lpdfs))[:7]), fontdict={'fontsize': 15})

ax3.plot(train.iloc[-50:], color = "blue", label = "Last 50 observations of training set")

ax3.plot(test, color = "red", label = "Test set")

ax3.grid(alpha = 0.5)

ax3.margins(x=0)

ax3.fill_between(test.index, garch_lower, garch_upper, color="orange", alpha=0.5, label="GARCH (5,5) - 90% forecast interval")

ax3.legend()

ax3.set_title("GARCH(5,5)- Test set loglikelihood: {}".format(str(np.sum(garch_lpdfs))[:7]), fontdict={'fontsize': 15})

ax4.plot(train.iloc[-50:], color = "blue", label = "Last 50 observations of training set")

ax4.plot(test, color = "red", label = "Test set")

ax4.grid(alpha = 0.5)

ax4.margins(x=0)

ax4.fill_between(test.index, kde_lower, kde_upper, color="orange", alpha=0.5, label="I.i.d. Kernel Density - 90% forecast interval")

ax4.legend()

ax4.set_title("I.i.d. KDE - Test set loglikelihood: {}".format(str(np.sum(benchmark_lpdfs))[:7]), fontdict={'fontsize': 15})

In fact, our tree models perform best for our 30 day test set. Surprisingly, the GARCH model performed worse than our kernel density benchmark. Keep in mind though that a single evaluation doesn’t allow any generalizing conclusions.

Nevertheless, our Random Forest and Gradient Boosting ARCH models appear to work reasonably well.

Conclusion

This article gave a quick demonstration of how tree ensembles can be used for volatility forecasts. Although the example models could possibly be enhanced a lot, the initial ideas already seem to work quite well.

Possible enhancement could be a better choice of conditional distributions. I.e. all our models, except the kernel density benchmark, worked with Gaussianity assumptions. In for financial time-series, this might obviously be a sub-optimal choice. If, for example, we wanted to account for heavy conditional tails, a Student’s T-distribution would be better suited.

PS: Notebook for this article is available on GitHub.

References

[1] Breiman, Leo. Random forests. Machine learning, 2001, 45.1, p. 5-32.

[2] Bollerslev, Tim. Modelling the coherence in short-run nominal exchange rates: a multivariate generalized ARCH model. The review of economics and statistics, 1990, p. 498-505.

[3] Ke, Guolin, et al. Lightgbm: A highly efficient gradient boosting decision tree. Advances in neural information processing systems, 2017, 30

Leave a Reply